Autoencoder

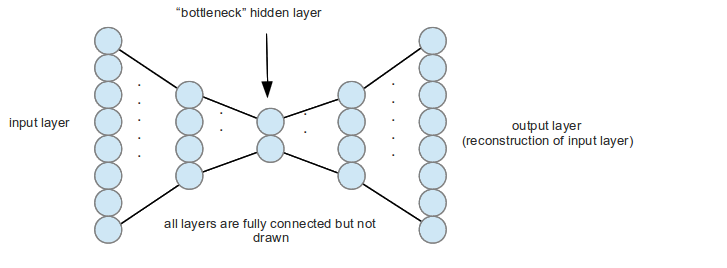

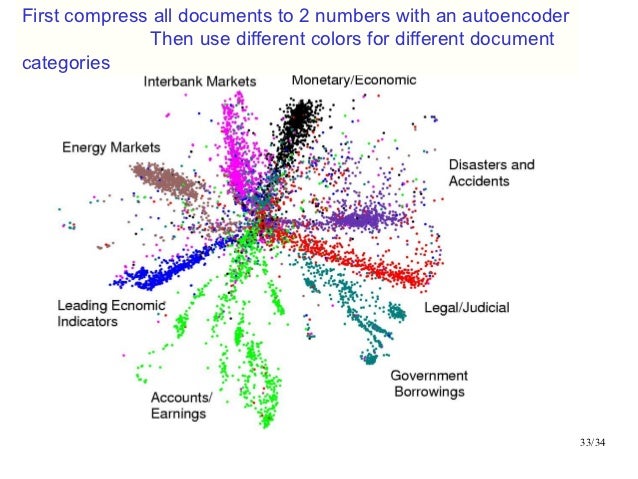

An autoencoder is an artificial neural network used to learn efficient codings. Its goal is to learn a compressed representation (encoding) of a set of data and, in doing so, to extract the essential features. It can therefore be used for dimensionality reduction.

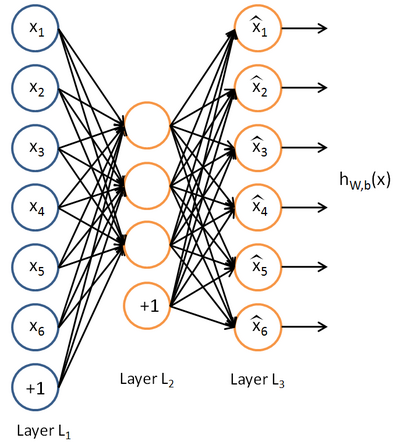

An autoencoder uses three or more layers:

- An input layer. In face recognition, the neurons could represent, for example, the pixels of a photograph.

- A number of significantly smaller layers, which form the encoding.

- An output layer, in which each neuron has the same meaning as the corresponding neuron in the input layer.
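The layer structure above can be sketched as a forward pass in NumPy. This is a minimal illustration, not a reference implementation: the sizes (64 inputs, an 8-unit encoding), the sigmoid activation, and the names `encode` and `decode` are assumptions chosen for the example, and the weights are random rather than trained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: e.g. an 8x8 pixel image compressed to 8 values.
n_inputs, n_code = 64, 8

# Randomly initialised weights and zero biases (untrained network).
W_enc = rng.normal(scale=0.1, size=(n_inputs, n_code))
b_enc = np.zeros(n_code)
W_dec = rng.normal(scale=0.1, size=(n_code, n_inputs))
b_dec = np.zeros(n_inputs)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def encode(x):
    """Map inputs to the smaller encoding layer."""
    return sigmoid(x @ W_enc + b_enc)


def decode(code):
    """Map the encoding back to a layer shaped like the input."""
    return sigmoid(code @ W_dec + b_dec)


x = rng.random((5, n_inputs))  # batch of 5 made-up inputs in [0, 1)
code = encode(x)               # shape (5, 8): the compressed representation
x_hat = decode(code)           # shape (5, 64): one output neuron per input neuron
```

The output layer has exactly as many neurons as the input layer, so `x_hat` can be compared element by element with `x` when the network is trained.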

When linear neurons are used, it is very similar to principal component analysis.
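The relationship to principal component analysis can be illustrated numerically. The sketch below assumes a purely linear autoencoder with squared reconstruction error and made-up Gaussian data. It does not train a network. Instead it uses the principal directions from an SVD as encoder and decoder weights, and compares their reconstruction error with that of a randomly chosen linear encoder whose decoder is fitted by least squares.

```python
import numpy as np

rng = np.random.default_rng(0)

# Made-up, centred data: 200 samples with 5 correlated features.
X = rng.normal(size=(200, 5)) @ rng.normal(size=(5, 5))
X = X - X.mean(axis=0)
k = 2  # size of the encoding layer (assumption for this example)

# PCA via SVD: the rows of Vt are the principal directions.
_, s, Vt = np.linalg.svd(X, full_matrices=False)
W_enc = Vt[:k].T  # encoder weights, shape (5, k)
W_dec = Vt[:k]    # decoder weights, shape (k, 5)
pca_error = np.sum((X - X @ W_enc @ W_dec) ** 2)

# A random linear encoder with the same bottleneck; its decoder is the
# least-squares optimum for that encoder.
R_enc = rng.normal(size=(5, k))
codes = X @ R_enc
R_dec, *_ = np.linalg.lstsq(codes, X, rcond=None)
random_error = np.sum((X - codes @ R_dec) ** 2)

print("PCA-weight reconstruction error:   ", pca_error)
print("random-encoder reconstruction error:", random_error)
```

No linear encoder and decoder with a `k`-unit bottleneck can reconstruct the data with a smaller squared error than the projection onto the first `k` principal components, which is why a trained linear autoencoder ends up spanning the same subspace.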

Training

An autoencoder is often trained with one of the many variants of backpropagation (conjugate gradient method, gradient descent, etc.). Although this approach is often very effective, training neural networks with hidden layers poses fundamental problems. By the time the errors have been backpropagated to the first few layers, they have become insignificant. As a result, the network almost always learns to reproduce the average of the training data. There are advanced backpropagation methods (such as the conjugate gradient method) that remedy this problem to some extent, but the approach still tends to lead to slow learning and poor results. To remedy this, one uses initial weights that already roughly correspond to the final result. This is called pretraining.
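As a rough illustration of training by backpropagation, the following sketch fits a small autoencoder with plain full-batch gradient descent. Everything specific here is an assumption made for the example: the made-up data, the single `tanh` encoding layer, the linear output layer, the learning rate, and the number of steps. A network this shallow does not exhibit the difficulties described above, which arise with several hidden layers.

```python
import numpy as np

rng = np.random.default_rng(0)

# Made-up data: 100 samples in 10 dimensions lying on a 3-dimensional subspace.
X = rng.normal(size=(100, 3)) @ rng.normal(size=(3, 10))
X /= X.std()

n_samples, n_in = X.shape
n_code = 3  # size of the encoding layer
W1 = rng.normal(scale=0.1, size=(n_in, n_code))
b1 = np.zeros(n_code)
W2 = rng.normal(scale=0.1, size=(n_code, n_in))
b2 = np.zeros(n_in)
lr = 0.01  # learning rate (assumption)

losses = []
for step in range(2000):
    # Forward pass: encode with tanh units, decode with linear units.
    H = np.tanh(X @ W1 + b1)
    X_hat = H @ W2 + b2
    err = X_hat - X
    losses.append(0.5 * np.sum(err ** 2) / n_samples)

    # Backward pass: propagate the reconstruction error layer by layer.
    g_out = err / n_samples
    g_W2 = H.T @ g_out
    g_b2 = g_out.sum(axis=0)
    g_pre = (g_out @ W2.T) * (1.0 - H ** 2)  # derivative of tanh
    g_W1 = X.T @ g_pre
    g_b1 = g_pre.sum(axis=0)

    # Gradient descent update.
    W1 -= lr * g_W1
    b1 -= lr * g_b1
    W2 -= lr * g_W2
    b2 -= lr * g_b2

print("initial loss:", losses[0])
print("final loss:  ", losses[-1])
```

The target of the network is its own input, so no labels are needed; the loss is simply the reconstruction error.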

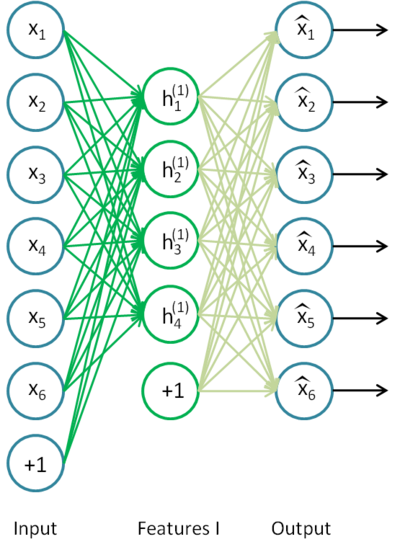

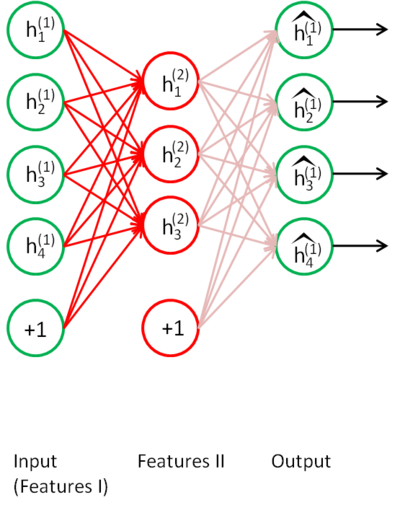

In a pretraining technique developed by Geoffrey Hinton for training multi-layer autoencoders, each pair of adjacent layers is treated as a restricted Boltzmann machine in order to obtain a good initial approximation; backpropagation is then used for fine-tuning.
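The layer-wise idea can be sketched as follows. This is a simplified illustration under stated assumptions, not the published procedure in full: each restricted Boltzmann machine is trained with one-step contrastive divergence (CD-1) on the whole batch, the data are made-up binary vectors, and the layer sizes, learning rate, and the names `train_rbm` and `autoencode` are hypothetical. The fine-tuning step with backpropagation is omitted.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_rbm(V, n_hidden, rng, epochs=20, lr=0.1):
    """Fit one RBM to the rows of V using one-step contrastive divergence."""
    n_samples, n_visible = V.shape
    W = rng.normal(scale=0.01, size=(n_visible, n_hidden))
    a = np.zeros(n_visible)  # visible biases
    b = np.zeros(n_hidden)   # hidden biases
    for _ in range(epochs):
        # Positive phase: hidden probabilities given the data.
        p_h = sigmoid(V @ W + b)
        h = (rng.random(p_h.shape) < p_h).astype(float)
        # Negative phase: one Gibbs step back to the visible layer and up again.
        p_v = sigmoid(h @ W.T + a)
        p_h_recon = sigmoid(p_v @ W + b)
        # CD-1 update: data statistics minus reconstruction statistics.
        W += lr * (V.T @ p_h - p_v.T @ p_h_recon) / n_samples
        a += lr * (V - p_v).mean(axis=0)
        b += lr * (p_h - p_h_recon).mean(axis=0)
    return W, a, b


rng = np.random.default_rng(0)
X = (rng.random((200, 16)) < 0.3).astype(float)  # made-up binary data

# Greedy layer-wise pretraining: each RBM is trained on the hidden
# probabilities produced by the one below it.
layer_sizes = [8, 4]  # hypothetical encoder layer sizes
stack = []
data = X
for n_hidden in layer_sizes:
    W, a, b = train_rbm(data, n_hidden, rng)
    stack.append((W, a, b))
    data = sigmoid(data @ W + b)


def autoencode(x):
    """Unroll the stack: encoder uses W, decoder reuses the transposed weights."""
    h = x
    for W, _, b in stack:
        h = sigmoid(h @ W + b)
    for W, a, _ in reversed(stack):
        h = sigmoid(h @ W.T + a)
    return h


X_hat = autoencode(X)  # reconstruction from the pretrained, not yet fine-tuned weights
```

The weights collected in `stack` would then serve as the initial weights of the autoencoder, which is subsequently fine-tuned with backpropagation on the reconstruction error.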