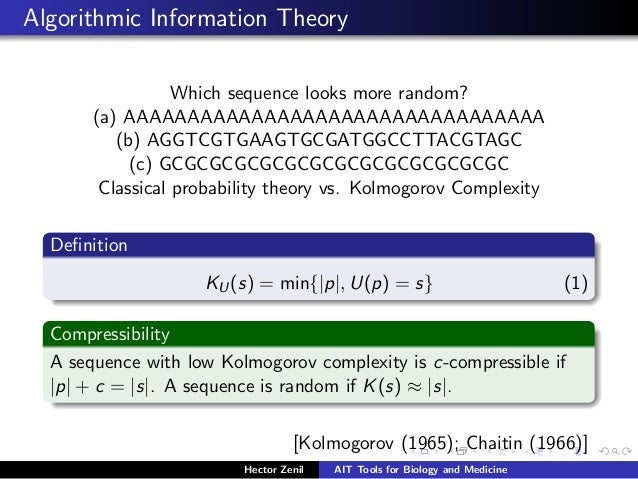

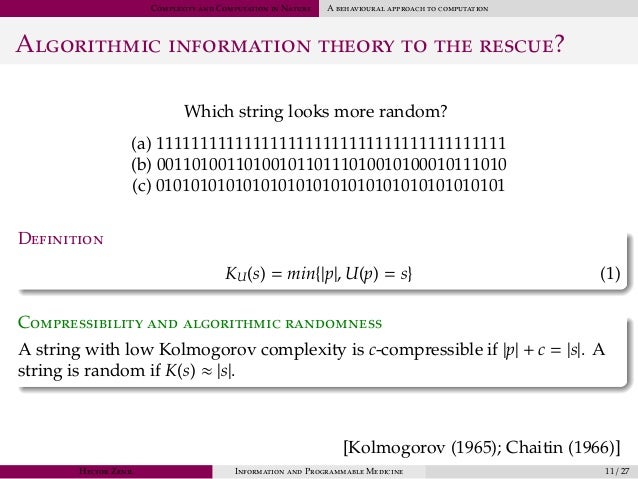

Algorithmic information theory

The algorithmic information theory is a theory in theoretical computer science that uses the Kolmogorov complexity to determine the information content in contrast to classical information theory. It was developed mainly by Gregory Chaitin, Andrey Kolmogorov and Ray Solomonoff.

Describing the information content of a character string, the algorithmic information theory considers the size of the smallest algorithm that generates the string. Gregory Chaitin clarified this well-known as Kolmogorov complexity size by a special machine model on which the algorithm must be executable. How could prove Chaitin, the algorithmic information content of a string can not definitively indicate, as it is not provable, whether a given program is to produce it really is the shortest. Just as the concept of information by Claude Shannon, the algorithmic information theory makes no representations about meaning, knowledge, and similar non- mathematically defined terms.

Example

According to the classical definition by Claude Shannon, the information content of the following binary sequence is equal to ( applies only to first-order entropy ):

While the first episode was produced by coin toss as random, the second episode, however, can be " 8x1 then 8x0 " shortened by the statement. In terms of algorithmic information theory, the first episode therefore more algorithmic information because it may be much more difficult or even not be shortened. The algorithmic information is higher, the less a string can be compressed ( among others through data compression). Random number sequences and white noise contained no predictable pattern in the rule and are therefore not compressible - the algorithmic information content is therefore higher.

Mathematical Background

The approach of Andrei Kolmogorov allows programs as algorithms for arbitrary Turing machines. Gregory Chaitin is the Kolmogorov complexity of the theory of recursive functions ( See also μ - recursion, lambda calculus ) and the work of Kurt Gödel in relationship. It limits the possible programs to those that run itself again on a special variant of the universal Turing machine ( UTM), on the so-called self -limiting universal Turing machine.

By the proof of Chaitin but can not always be determined whether a string can be further shortened algorithmically. Thus, new and more effective compression algorithms can be found, for example, or a seemingly random number sequence can originate from a pseudo-random number generator. Because of the problem can not hold all Turing machine, which are smaller than the signal be sampled in a finite time.