Backpropagation

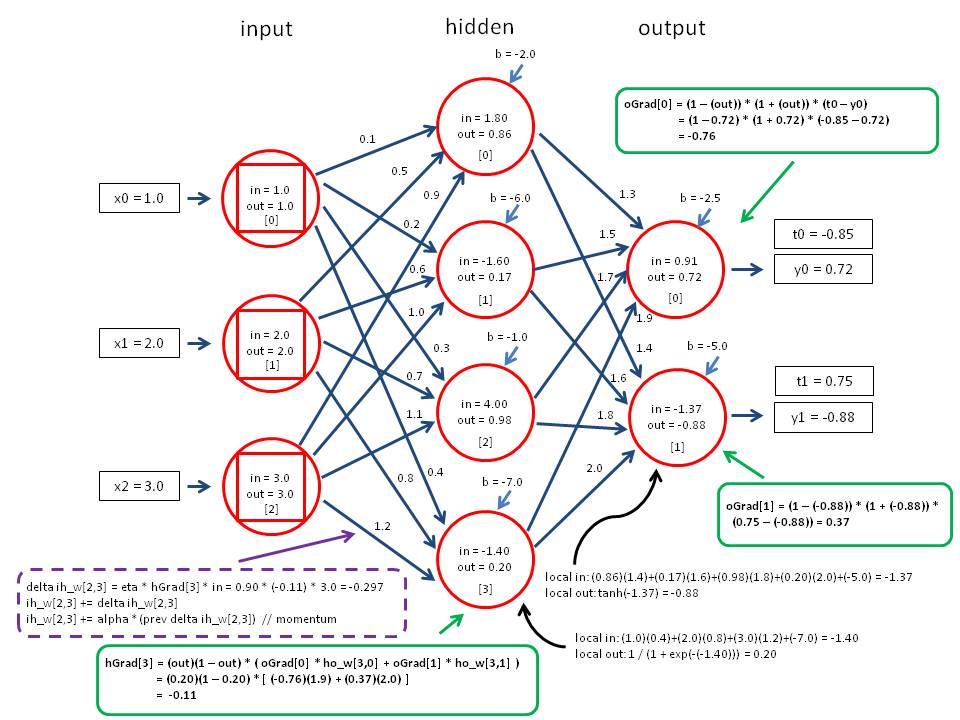

Backpropagation, also called backpropagation of error or error feedback (also back-propagation), is a common method for training artificial neural networks. It was first formulated by Paul Werbos in 1974. However, it became widely known only in 1986 through the work of David E. Rumelhart, Geoffrey E. Hinton and Ronald J. Williams, and it led to a "renaissance" of research on artificial neural networks.

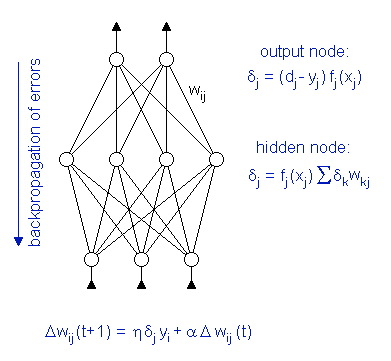

It belongs to the group of supervised learning methods and is applied as a generalization of the delta rule to multilayer networks. This requires an external teacher that knows the desired output, the target value, at every moment an input is presented. Backpropagation is a special case of a general gradient method in optimization, based on the mean squared error.


Error minimization

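In the learning problem, the goal is for an arbitrary network to map given input vectors as accurately as possible onto given output vectors. The quality of this mapping is described by an error function, defined here as the squared error:

$$E = \frac{1}{2}\sum_{i=1}^{n}\left(t_i - o_i\right)^2$$

Here $E$ is the error, $n$ the number of output values being compared, $t_i$ the desired output or target value, and $o_i$ the output actually computed by the network.

The factor $\frac{1}{2}$ is included only to simplify the derivative.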
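As a small illustration, the error function and its derivative with respect to each output can be sketched in Python; the function names are hypothetical. The derivative shows why the factor $\frac{1}{2}$ is convenient: it cancels the 2 that comes from the square.

```python
def squared_error(targets, outputs):
    """E = 1/2 * sum_i (t_i - o_i)**2 over all compared outputs."""
    if len(targets) != len(outputs):
        raise ValueError("targets and outputs must have the same length")
    return 0.5 * sum((t - o) ** 2 for t, o in zip(targets, outputs))


def squared_error_derivative(targets, outputs):
    """dE/do_i = -(t_i - o_i) = o_i - t_i; the factor 1/2 cancels the 2 of the square."""
    return [o - t for t, o in zip(targets, outputs)]


print(squared_error([1.0, 0.0], [0.5, 0.25]))             # 0.15625
print(squared_error_derivative([1.0, 0.0], [0.5, 0.25]))  # [-0.5, 0.25]
```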

The goal is now to minimize the error function, although in general only a local minimum is found. In the backpropagation method, an artificial neural network is trained by changing its weights, because, besides the activation function, the network's output depends directly on them.

Algorithm

The backpropagation algorithm consists of the following phases:

- An input pattern is applied and propagated forward through the network.

- The output of the network is compared with the desired output. The difference between the two is regarded as the error of the network.

- The error is propagated backward from the output layer to the input layer. The weights of the connections between neurons are changed according to their influence on the error. As a result, when the input is applied again, the output moves closer to the desired output.
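The three phases can be sketched for a small fully connected network with one hidden layer. This is a minimal pure-Python sketch under assumptions the text does not state: a sigmoid activation, a constant input of 1 appended to each layer as a bias, weights stored as one row per receiving neuron, and the hypothetical names `forward` and `train_step`. The update rule it uses is the one derived in the following sections.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hidden, w_out):
    """Phase 1: propagate the input pattern forward through the network.

    w_hidden[j][i] is the weight from input i to hidden neuron j,
    w_out[k][j] the weight from hidden neuron j to output neuron k.
    A constant 1.0 is appended to each layer's outputs to act as a bias input.
    """
    xb = list(x) + [1.0]
    hidden = [sigmoid(sum(xi * w for xi, w in zip(xb, row))) for row in w_hidden]
    hb = hidden + [1.0]
    out = [sigmoid(sum(hj * w for hj, w in zip(hb, row))) for row in w_out]
    return xb, hb, out


def train_step(x, target, w_hidden, w_out, eta=0.5):
    """One backpropagation step on a single pattern; updates the weights in place."""
    xb, hb, out = forward(x, w_hidden, w_out)

    # Phase 2: compare the output with the desired output (squared error).
    error = 0.5 * sum((t - o) ** 2 for t, o in zip(target, out))

    # Phase 3: propagate error signals backward; for the sigmoid, phi' = o * (1 - o).
    delta_out = [o * (1.0 - o) * (o - t) for o, t in zip(out, target)]
    delta_hidden = [
        h * (1.0 - h) * sum(delta_out[k] * w_out[k][j] for k in range(len(w_out)))
        for j, h in enumerate(hb[:-1])  # the bias unit has no incoming weights
    ]

    # Change each weight against its influence on the error: -eta * delta * input.
    for k, row in enumerate(w_out):
        for j in range(len(row)):
            row[j] -= eta * delta_out[k] * hb[j]
    for j, row in enumerate(w_hidden):
        for i in range(len(row)):
            row[i] -= eta * delta_hidden[j] * xb[i]
    return error


random.seed(0)
n_in, n_hidden, n_out = 2, 3, 1
w_hidden = [[random.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
w_out = [[random.uniform(-1, 1) for _ in range(n_hidden + 1)] for _ in range(n_out)]

first = train_step([1.0, 0.0], [1.0], w_hidden, w_out)
for _ in range(200):
    last = train_step([1.0, 0.0], [1.0], w_hidden, w_out)
print(first, last)  # the error on this pattern shrinks as the weights adapt
```

The hidden-layer error signals are computed before any weight is changed, so every signal refers to the same network state.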

The name of the algorithm derives from the backward propagation of the error (error back-propagation).

Derivation

The formula of the backpropagation method is derived by differentiation. For the output of a neuron with two inputs, one obtains a two-dimensional error surface, in which the error of the neuron depends on the weights of the inputs and on the inputs themselves. This error surface contains minima that are to be found. This can be done with the gradient method: starting from a point on the surface, one descends in the direction of the steepest decrease of the error function.
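The descent along the steepest slope can be illustrated on a hypothetical error surface over two weights. The bowl-shaped function, its minimum at $(1, -0.5)$, the learning rate of 0.1 and the function names below are illustrative assumptions, not part of the method itself.

```python
def error_surface(w1, w2):
    # Hypothetical error surface over two weights with its minimum at (1, -0.5).
    return (w1 - 1.0) ** 2 + 2.0 * (w2 + 0.5) ** 2


def gradient(w1, w2):
    # Partial derivatives dE/dw1 and dE/dw2 of the surface above.
    return 2.0 * (w1 - 1.0), 4.0 * (w2 + 0.5)


def gradient_descent(w1, w2, eta=0.1, steps=100):
    for _ in range(steps):
        g1, g2 = gradient(w1, w2)
        # Move against the gradient, i.e. in the direction of steepest descent.
        w1, w2 = w1 - eta * g1, w2 - eta * g2
    return w1, w2


w1, w2 = gradient_descent(3.0, 2.0)
print(w1, w2, error_surface(w1, w2))  # close to 1, -0.5 and 0
```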

Neuron output

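For the derivation of the backpropagation method, the output of an artificial neuron is briefly recalled. The output of an artificial neuron $j$ can be defined by

$$o_j = \varphi(\mathrm{net}_j)$$

and its net input by

$$\mathrm{net}_j = \sum_{i=1}^{n} x_i\, w_{ij}$$

Here $\varphi$ is a differentiable activation function whose derivative is not zero everywhere, $n$ is the number of inputs, $x_i$ is input $i$, and $w_{ij}$ is the weight between input $i$ and neuron $j$.

A threshold value is omitted here. It is usually realized by an additional, always-firing "on" neuron whose output is fixed at the constant value 1; the weight of its connection then takes the role of the threshold. In this way, one unknown is eliminated.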
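The "on" neuron trick can be checked directly. This sketch assumes a sigmoid activation and a threshold $\theta$ that is subtracted from the net input; under that convention the constant input 1 carries the weight $-\theta$. The names and example values are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def net_input(inputs, weights):
    # net_j = sum_i x_i * w_ij
    return sum(x * w for x, w in zip(inputs, weights))


def output_with_threshold(inputs, weights, theta):
    # Explicit threshold theta subtracted from the net input.
    return sigmoid(net_input(inputs, weights) - theta)


def output_with_on_neuron(inputs, weights, theta):
    # The threshold becomes the weight -theta of an extra input fixed at 1.
    return sigmoid(net_input(list(inputs) + [1.0], list(weights) + [-theta]))


x, w, theta = [0.5, -1.0], [0.8, 0.3], 0.2
print(output_with_threshold(x, w, theta), output_with_on_neuron(x, w, theta))
```

Both functions return the same value, so the threshold can be learned like any other weight.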

Derivative of the error function

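The partial derivative of the error function is obtained by using the chain rule:

$$\frac{\partial E}{\partial w_{ij}} = \frac{\partial E}{\partial o_j}\,\frac{\partial o_j}{\partial \mathrm{net}_j}\,\frac{\partial \mathrm{net}_j}{\partial w_{ij}}$$

From the individual terms, the following formula can now be calculated. In contrast to the simple delta rule, the derivative depends on two cases:

- If the neuron lies in the output layer, it contributes directly to the output.

- If it lies in a hidden layer, its adjustment can only be calculated indirectly.

Specifically:

$$\frac{\partial E}{\partial w_{ij}} = \delta_j\, o_i$$

with

$$\delta_j = \begin{cases} \varphi'(\mathrm{net}_j)\,(o_j - t_j) & \text{if } j \text{ is an output neuron,}\\ \varphi'(\mathrm{net}_j)\,\sum_k \delta_k\, w_{jk} & \text{if } j \text{ is a hidden neuron.} \end{cases}$$

Here

- $\delta_j$ is the error signal of neuron $j$,

- $o_i$ is the output of neuron $i$, which serves as the input $x_i$ of neuron $j$,

- $t_j$ is the desired output of output neuron $j$,

- $\mathrm{net}_j$ is the net input of neuron $j$,

- $k$ is the index of the neurons that follow neuron $j$.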
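The two cases for the error signal, $\delta_j = \varphi'(\mathrm{net}_j)(o_j - t_j)$ for output neurons and $\delta_j = \varphi'(\mathrm{net}_j)\sum_k \delta_k w_{jk}$ for hidden neurons, can be checked numerically. The sketch below assumes a sigmoid activation, for which $\varphi'(\mathrm{net}_j) = o_j(1 - o_j)$, one hidden layer, no thresholds (as in the text), and hypothetical names and example values. It computes $\partial E/\partial w_{ij} = \delta_j o_i$ and compares it with a central finite difference.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w1, w2):
    # w1[i][j]: weight from input i to hidden neuron j; w2[j][k]: hidden j to output k.
    o_h = [sigmoid(sum(x[i] * w1[i][j] for i in range(len(x)))) for j in range(len(w1[0]))]
    o_o = [sigmoid(sum(o_h[j] * w2[j][k] for j in range(len(o_h)))) for k in range(len(w2[0]))]
    return o_h, o_o


def error(x, t, w1, w2):
    _, o = forward(x, w1, w2)
    return 0.5 * sum((tk - ok) ** 2 for tk, ok in zip(t, o))


def analytic_gradients(x, t, w1, w2):
    o_h, o_o = forward(x, w1, w2)
    # Output neurons: delta_k = phi'(net_k) * (o_k - t_k), with phi' = o * (1 - o).
    d_out = [ok * (1.0 - ok) * (ok - tk) for ok, tk in zip(o_o, t)]
    # Hidden neurons: delta_j = phi'(net_j) * sum_k delta_k * w_jk.
    d_hid = [oj * (1.0 - oj) * sum(d_out[k] * w2[j][k] for k in range(len(d_out)))
             for j, oj in enumerate(o_h)]
    # dE/dw_ij = delta_j * o_i, where o_i is the input x_i for the first layer.
    g1 = [[d_hid[j] * x[i] for j in range(len(d_hid))] for i in range(len(x))]
    g2 = [[d_out[k] * o_h[j] for k in range(len(d_out))] for j in range(len(o_h))]
    return g1, g2


def numeric_gradient(x, t, w1, w2, layer, i, j, h=1e-6):
    # Central finite difference for the single weight w[i][j] of the given layer.
    w = w1 if layer == 1 else w2
    original = w[i][j]
    w[i][j] = original + h
    e_plus = error(x, t, w1, w2)
    w[i][j] = original - h
    e_minus = error(x, t, w1, w2)
    w[i][j] = original
    return (e_plus - e_minus) / (2.0 * h)


x, t = [0.5, -0.3], [0.8]
w1 = [[0.1, -0.4], [0.7, 0.2]]
w2 = [[0.3], [-0.6]]
g1, g2 = analytic_gradients(x, t, w1, w2)
print(g1[0][1], numeric_gradient(x, t, w1, w2, 1, 0, 1))  # nearly identical values
print(g2[1][0], numeric_gradient(x, t, w1, w2, 2, 1, 0))
```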

Modifying the weights

The error signal $\delta_j$ is where the two kinds of neurons differ: if the neuron lies in a hidden layer, its weight is changed depending on the error generated by the subsequent neurons, which in turn obtain their inputs from the neuron under consideration.

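The weights can now be changed as follows:

$$\Delta w_{ij} = -\eta\,\frac{\partial E}{\partial w_{ij}} = -\eta\,\delta_j\,o_i$$

Here

- $\Delta w_{ij}$ is the change of the weight $w_{ij}$ of the connection from neuron $i$ to neuron $j$,

- $\eta$ is a fixed learning rate that determines the strength of the weight changes,

- $\delta_j$ is the error signal of neuron $j$, corresponding to $\frac{\partial E}{\partial \mathrm{net}_j}$,

- $o_i$ is the output of neuron $i$.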
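A single application of the update rule $\Delta w_{ij} = -\eta\,\delta_j\,o_i$ can be sketched for one sigmoid output neuron. The sigmoid activation, the helper names `update_weights` and `output`, and the example values are assumptions for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def update_weights(weights, deltas, prev_outputs, eta):
    """Return w_ij + dw_ij with dw_ij = -eta * delta_j * o_i.

    weights[i][j] is the weight from neuron i (output o_i) to neuron j.
    """
    return [
        [weights[i][j] - eta * deltas[j] * prev_outputs[i] for j in range(len(deltas))]
        for i in range(len(prev_outputs))
    ]


def output(prev_outputs, weights):
    # Single sigmoid output neuron j = 0 fed by the previous layer.
    return sigmoid(sum(o_i * w_i[0] for o_i, w_i in zip(prev_outputs, weights)))


o_prev = [1.0, 0.5]      # outputs o_i of the preceding layer (example values)
w = [[0.2], [-0.1]]      # weights w_i0 into the output neuron
t = 1.0                  # desired output
o = output(o_prev, w)
delta = [o * (1.0 - o) * (o - t)]  # output-neuron case of delta_j
w_new = update_weights(w, delta, o_prev, eta=0.5)
print(0.5 * (t - o) ** 2, 0.5 * (t - output(o_prev, w_new)) ** 2)  # error before and after
```

Because the output lies below the target, $\delta_j$ is negative and both weights grow, which raises the output and lowers the error.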

Extension

The choice of the learning rate $\eta$ is important to the method: too high a value causes large changes, so the minimum may be overshot, while too small a learning rate slows learning down unnecessarily.
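The effect of the learning rate can be seen on the hypothetical one-dimensional error $E(w) = w^2$, whose gradient is $2w$. Each gradient step multiplies $w$ by $(1 - 2\eta)$, so for this particular function any $\eta > 1$ makes the iteration diverge. The values below are illustrative choices.

```python
def descend_quadratic(eta, w=1.0, steps=20):
    # Gradient descent on the hypothetical error E(w) = w**2 (gradient 2w, minimum at 0).
    for _ in range(steps):
        w -= eta * 2.0 * w
    return w


for eta in (0.01, 0.45, 1.1):
    print(eta, descend_quadratic(eta))
```

With $\eta = 0.01$, $w$ is still about two thirds of its starting value after 20 steps; with $\eta = 0.45$ it is practically at the minimum; with $\eta = 1.1$ it jumps back and forth across the minimum with growing amplitude.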

Various optimizations of backpropagation, such as Quickprop, primarily aim to accelerate error minimization; other improvements mainly try to increase reliability.

Backpropagation with variable learning rate

To prevent the network from oscillating, i.e. from producing connection weights that keep alternating, there are refinements of the method that use a variable learning rate.
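The text does not specify a particular scheme, so the following is only one simple heuristic chosen as an assumption: after each step, the learning rate is increased slightly if the error fell, and the step is rejected and the rate halved if the error rose. It is applied to the hypothetical error $E(w) = w^2$, starting from $\eta = 1.1$, a rate at which plain gradient descent would oscillate with growing amplitude. The function name and factors are hypothetical.

```python
def adaptive_descent(w=1.0, eta=1.1, steps=50, grow=1.1, shrink=0.5):
    # Hypothetical error E(w) = w**2 with gradient 2w; eta=1.1 alone would oscillate.
    error = w * w
    for _ in range(steps):
        candidate = w - eta * 2.0 * w
        candidate_error = candidate * candidate
        if candidate_error < error:
            # Error fell: accept the step and cautiously increase the learning rate.
            w, error = candidate, candidate_error
            eta *= grow
        else:
            # Error rose (overshoot, oscillation): reject the step and reduce the rate.
            eta *= shrink
    return w, eta


w, eta = adaptive_descent()
print(w, eta)  # w ends very close to the minimum at 0
```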

Backpropagation with inertia term

By using a variable inertia term (momentum), the gradient and the last update can be weighted so that the weight adjustment additionally depends on the previous change. If the momentum is 0, the change depends only on the gradient; if it is 1, it depends only on the last change.

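Similar to a ball rolling down a mountain, whose current speed is determined not only by the current slope of the mountain but also by its own inertia, an inertia term can be added to backpropagation:

$$\Delta w_{ij}(t+1) = -(1-\alpha)\,\eta\,\delta_j\,o_i + \alpha\,\Delta w_{ij}(t)$$

Here

- $\Delta w_{ij}(t+1)$ is the change of the weight $w_{ij}$ of the connection from neuron $i$ to neuron $j$ at time $t+1$,

- $\eta$ is the learning rate,

- $\delta_j$ is the error signal of neuron $j$ and $o_i$ the output of neuron $i$, both computed for the current step,

- $\alpha$ is the influence of the inertia term $\Delta w_{ij}(t)$, which is the weight change at the previous time step.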

Thus, the current weight change depends both on the current gradient of the error function (slope of the mountain, first summand) and on the weight change at the previous time step (own inertia, second summand).

Among other things, the inertia term helps avoid problems of the backpropagation rule in steep ravines and on flat plateaus. On a flat plateau, for example, the gradient of the error function is very small, so without an inertia term gradient descent would slow down immediately. The inertia term delays this "braking", so that a flat plateau can be crossed quickly.
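The delayed braking can be illustrated with the update $\Delta w(t+1) = -(1-\alpha)\,\eta\,\frac{\partial E}{\partial w} + \alpha\,\Delta w(t)$ on a hypothetical one-dimensional landscape: a steep slope for $w < 0$ followed by an almost flat plateau. The landscape, $\eta = 0.1$, $\alpha = 0.9$ and the function names are assumptions. With this $(1-\alpha)$ weighting, the step size during steady descent matches that of the plain rule; the gain shown here is that the speed built up on the slope carries over onto the plateau.

```python
def plateau_gradient(w):
    # Hypothetical 1-D landscape: steep slope for w < 0, almost flat plateau for w >= 0.
    return -1.0 if w < 0 else -0.001


def descend_with_momentum(alpha, eta=0.1, w=-1.0, steps=40):
    """Gradient descent with dw(t+1) = -(1 - alpha) * eta * dE/dw + alpha * dw(t)."""
    dw = 0.0
    for _ in range(steps):
        dw = -(1.0 - alpha) * eta * plateau_gradient(w) + alpha * dw
        w += dw
    return w


plain = descend_with_momentum(alpha=0.0)     # no inertia: steps shrink at once on the plateau
momentum = descend_with_momentum(alpha=0.9)  # inertia carries earlier speed onto the plateau
print(plain, momentum)
```

Without the inertia term, the position barely moves once the plateau is reached; with it, the weight travels considerably farther across the plateau in the same number of steps.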

Once the error of the network is minimal, learning can be stopped, and the multilayer network is ready to classify the learned patterns.