Time complexity

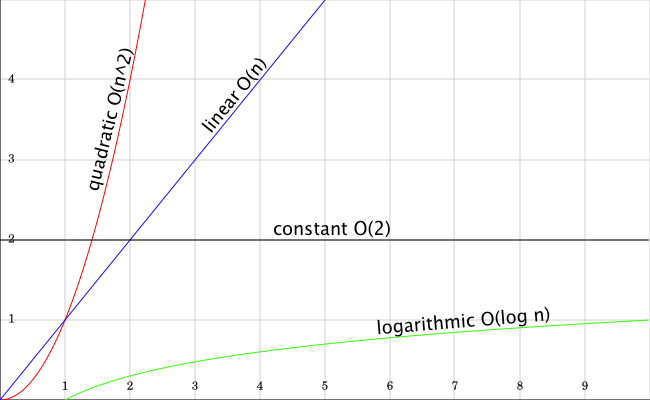

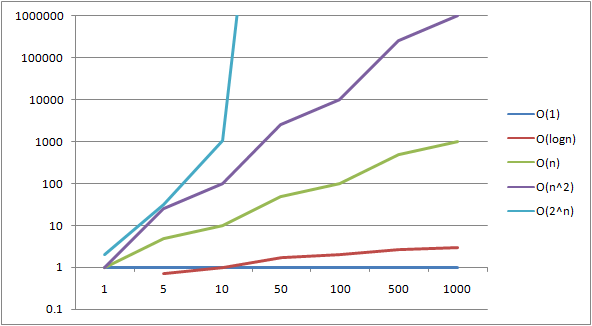

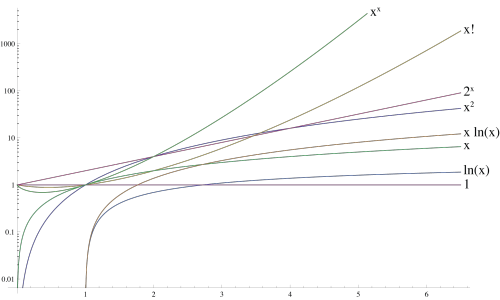

Of the time complexity of the problem is to be understood in the data processing, the number of calculation steps required for an optimal algorithm for solving this problem, depending on the length of the input. It is spoken here also of the asymptotic running time and means by that, on the basis of an asymptote, the time behavior of the algorithm for a potentially infinite amount of input. It is interested that is not the amount of time a specific program on a specific computer, but much more as the time required grows as more data are to be processed, eg whether the effort for the double amount of data doubled or squared ( scalability ). The term is therefore listed as a function of the length n of the input and estimated asymptotically for growing n using the Landau notation ( big-O notation). A more detailed runtime estimation of recurrence equations also offers the Mastertheorem or the substitution method.

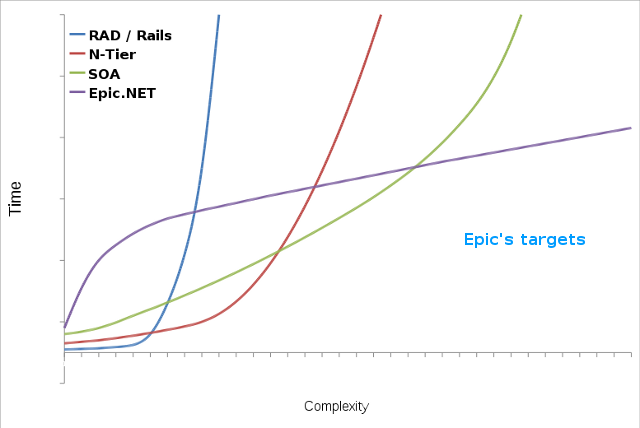

If the term of the time complexity with respect to a specific algorithm, as so is the number of steps meant that requires the algorithm for processing an input of a certain length n ( in the best, worst or average case, see below). In the computational complexity of the actual object of observation is, however, the complexity of problems. The algorithm complexity is only interesting insofar as it statements about the problem treated can be closed. In practice, however, is often interesting, especially to select an appropriate algorithm for a specific context: So Bubblesort is true for large data sets a fairly slow process, but is due to the low overhead suitable for small amounts of data (especially for n ≤ 8 ).

The time complexity is always given in relation to a machine model. In general, the reference model is the Turing machine, alternatively, the time complexity but also be specified in relation to a register machine, which more closely resembles the actually existing computers in their behavior. Parallel algorithms such as a parallel machine model the PRAM can be used. In addition, a distinction is made between the complexity of deterministic and non-deterministic machines.

In complexity theory, the time complexity is in addition to the space complexity of the most studied aspect of algorithms and problems. The time complexity of all algorithms that solve a problem, for example, is a series of significant complexity classes based.

Variants

There are the following variants at run time estimation:

- The worst-case running time ( engl. worst case) specifies how long the algorithm takes at most. For many algorithms, there are only a few inputs that reach the worst-case running time, which is why it is not necessarily a realistic estimate. But if it is for real -time systems, the worst-case running time must of course be taken into account.

- The average -case running time ( engl. average case ) is the expected life of at a given distribution of inputs. However, since the distribution of inputs for programs is not always known, the calculation of the average -case running time in these cases is possible only under restrictive assumptions. See also: Amortized running time analysis

- The best-case execution time ( engl. best case ) specifies how long the algorithm takes at least, so even for the inputs on which he is working perfectly. This lower bound for the solution of the problem is rarely indicated, since it applies only to a few cases and the best-case runtime is included for the worse cases in the.

Dependent time complexity

Most of the time complexity can only be specified as a function of different time complexities. So at sorting algorithms, time is measured not really directly but in " Compare Operations", assuming that each such comparison requires a constant time. While the normally applies to basic data types, this is, for example, strings already not. If two algorithms ( in this example, two sorting algorithms ) but perform similar comparisons, the result is still meaningful.

In some cases, even just as a definitive statement can be made. For example, the algorithm DBSCAN for each point exactly one neighborhood by request. As a neighborhood as the request is valid " slowest " operation in the algorithm, it is also said to be "linear" ( in the number of neighborhood requests). However, If you want to compare which performs no neighborhood requests it with an algorithm, one needs a different level. However, depending on an existing index structure a neighborhood query can be answered at different rates ( and that does not change the algorithm DBSCAN ). Without index support DBSCAN then has a "square" time complexity ( in the number of distance calculations ).

Example

In a list of twenty names are stored. It should now be entered and compared the existing name. The list, starting with the first entry is searched until the name is found.

- Best case: The required name is the first in the list, the search time is 1

- Worst case: The required name is the last in the list, the search time is 20 This search time would also be set if the name would not be on the list.

- Average case: If the name is definitely on the list, is the average case 10.5.

Simply Speaking of time complexity, as is usually the estimate for the worst-case scenario meant.

For a realistic estimate of the run time is suitable for complex algorithms, amortized analysis, which considers the average cost of the algorithm for all possible inputs, rather than just for the best / worst / uniformly distributed case. What is crucial is how likely it is that a certain event occurs. This type of analysis is particularly useful when considering the cost of a partial operation of a larger algorithm: When sorting by a Fibonacci heaps, for example, the operation of Einsortierens a new entry, although in the worst case is rather complex, but these only come once during the passage of the overall algorithm that in all steps of the heap is already " almost sorted ", and the single step is cheap. The problem with the amortized analysis is that it is not easy to perform, since you first have to develop a function to track the models the behavior of the data structure, and therefore indicates how likely the individual cases.

In information theory, the time complexity is used to determine the depth Algorithmic a dataset.