Joint probability distribution

The probability distribution of a multi-dimensional random variable is called a multivariate distribution or multidimensional distribution.

Introductory Example

We consider two random experiments.

The first consists of rolling a fair die twice. This is equivalent to an urn experiment with six distinguishable balls, in which a ball is drawn twice with replacement. There are 36 possible outcome pairs (since we take the order of the rolls or draws into account), and all 36 are equally likely, so each has probability 1/36.

The second experiment is a similar urn experiment, but without replacement. In this case the outcomes (1,1), (2,2), ..., (6,6) do not occur, since the i-th ball cannot be drawn in the second draw if it has already been removed in the first. The remaining 30 pairs are equally likely and therefore each have probability 1/30.

These two experiments yield two two-dimensional discrete random variables that have the same marginal distributions (in both experiments and in both draws, every number from 1 to 6 is equally likely and occurs with probability 1/6).

However, the two draws in the first experiment are independent, since the drawn ball is replaced, while they are not independent in the second experiment. This is most evident when one notes that the pairs (1,1), (2,2), ..., (6,6) must each occur with probability 1/36 in an independent experiment (the product of the marginal probabilities 1/6), but cannot occur at all in the second experiment (they have probability 0) because the ball is not replaced.

The joint distributions of the two experiments are therefore different; this is an example of two different discrete multivariate distributions with the same marginal distributions.
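The comparison of the two experiments can be checked by direct enumeration. The following is a minimal sketch in Python; the function names are chosen for illustration only and do not come from any library.

```python
from fractions import Fraction
from itertools import product

FACES = range(1, 7)


def joint_with_replacement():
    """Joint pmf of two draws with replacement (or two rolls of a fair die)."""
    return {(a, b): Fraction(1, 36) for a, b in product(FACES, repeat=2)}


def joint_without_replacement():
    """Joint pmf of two draws without replacement: pairs (i, i) get probability 0."""
    return {
        (a, b): Fraction(0) if a == b else Fraction(1, 30)
        for a, b in product(FACES, repeat=2)
    }


def marginals(joint):
    """Return the pmfs of the first and second draw by summing out the other one."""
    first = {a: sum(joint[(a, b)] for b in FACES) for a in FACES}
    second = {b: sum(joint[(a, b)] for a in FACES) for b in FACES}
    return first, second


with_repl = joint_with_replacement()
without_repl = joint_without_replacement()

# Both experiments have the same uniform marginals (probability 1/6 each) ...
print(marginals(with_repl) == marginals(without_repl))
# ... but the joint distributions differ, for instance on the pair (1, 1).
print(with_repl[(1, 1)], without_repl[(1, 1)])
```

Exact fractions are used instead of floating-point numbers so that the equality checks are not affected by rounding.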

Two-dimensional distribution function

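The distribution function of a two-dimensional random variable $Z = (X, Y)$ is defined as follows:

$$F_Z(x, y) = P(X \le x,\; Y \le y)$$

If the random variable $Z$ has a (two-dimensional) density $f_{X,Y}$, then the distribution function is

$$F_Z(x, y) = \int_{-\infty}^{x} \int_{-\infty}^{y} f_{X,Y}(u, v)\, dv\, du$$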

If the random variable is discrete, the joint distribution can be written in terms of conditional probabilities:

$$P(X = x,\; Y = y) = P(Y = y \mid X = x)\, P(X = x) = P(X = x \mid Y = y)\, P(Y = y)$$

In the continuous case, correspondingly,

$$f_{X,Y}(x, y) = f_{Y \mid X}(y \mid x)\, f_X(x) = f_{X \mid Y}(x \mid y)\, f_Y(y)$$

Here $f_{Y \mid X}(y \mid x)$ and $f_{X \mid Y}(x \mid y)$ are the conditional densities (of $Y$ given $X = x$, and of $X$ given $Y = y$), and $f_X(x)$, $f_Y(y)$ are the densities of the marginal distributions of $X$ and $Y$.
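As a worked illustration of the discrete factorization, consider the urn experiment without replacement from the introductory example. The names $X$ for the first draw and $Y$ for the second draw are assumptions made here for the example. After ball 1 has been removed, each of the five remaining balls is drawn with probability 1/5, so

$$P(X = 1,\; Y = 2) = P(Y = 2 \mid X = 1)\, P(X = 1) = \frac{1}{5} \cdot \frac{1}{6} = \frac{1}{30}$$

$$P(X = 1,\; Y = 1) = P(Y = 1 \mid X = 1)\, P(X = 1) = 0 \cdot \frac{1}{6} = 0$$

which agrees with the probabilities 1/30 and 0 stated for that experiment.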

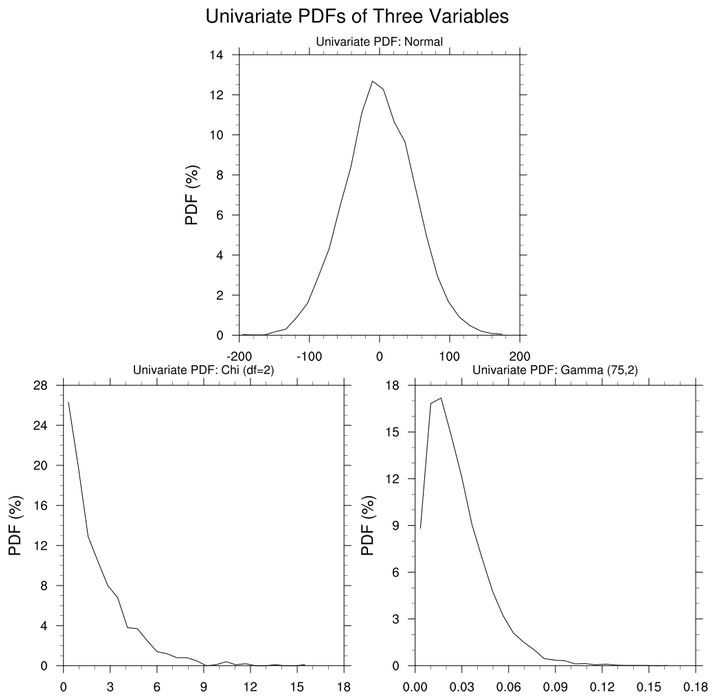

The figure shows an example of modeling the dependence structure using copulas. In particular, it illustrates that a bivariate random variable with normal marginal distributions need not follow a bivariate normal distribution.

The general multi-dimensional case

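If the n-dimensional random variable $X = (X_1, \dots, X_n)$ has a density $f_X$, then the distribution function is, analogously to the two-dimensional case,

$$F_X(x_1, \dots, x_n) = \int_{-\infty}^{x_1} \cdots \int_{-\infty}^{x_n} f_X(u_1, \dots, u_n)\, du_n \cdots du_1$$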

There are more possibilities for marginal distributions than in the two-dimensional case, because marginal distributions now exist for every lower dimension and there are several ways to choose the subspace. For example, in the three-dimensional case there are three one-dimensional and three two-dimensional marginal distributions.
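For a discrete distribution, each marginal distribution is obtained by choosing a subset of the coordinates and summing the joint probabilities over the remaining ones. The sketch below illustrates this for a three-dimensional case; the helper `marginal` and the coin-flip distribution are hypothetical examples, not part of the original text.

```python
from fractions import Fraction
from itertools import combinations, product


def marginal(joint, keep):
    """Sum a joint pmf over all coordinates whose index is not in `keep`."""
    result = {}
    for outcome, prob in joint.items():
        key = tuple(outcome[i] for i in keep)
        result[key] = result.get(key, Fraction(0)) + prob
    return result


# Hypothetical example: three fair coin flips, all 8 outcomes equally likely.
joint = {outcome: Fraction(1, 8) for outcome in product((0, 1), repeat=3)}

n = 3
for k in range(1, n):
    # Every choice of k coordinates out of n gives one k-dimensional marginal.
    subsets = list(combinations(range(n), k))
    print(f"{k}-dimensional marginals: {len(subsets)}")
    for keep in subsets:
        print("  coordinates", keep, "->", marginal(joint, keep))
```

In general, an n-dimensional distribution has as many k-dimensional marginal distributions as there are ways to choose k of the n coordinates.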

Joint distribution of independent random variables

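If, for discrete random variables $X$ and $Y$, $P(X = x,\; Y = y) = P(X = x)\, P(Y = y)$ holds for all $x$ and $y$, or, for continuous random variables, $f_{X,Y}(x, y) = f_X(x)\, f_Y(y)$ holds for all $x$ and $y$, then $X$ and $Y$ are independent.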
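The discrete criterion can be tested mechanically by comparing every joint probability with the product of the corresponding marginal probabilities. The following is a minimal sketch; `is_independent` is a name introduced here for illustration, and the two distributions are those of the urn experiments with and without replacement.

```python
from fractions import Fraction
from itertools import product


def is_independent(joint):
    """Check P(X=x, Y=y) == P(X=x) * P(Y=y) for every pair (x, y)."""
    xs = sorted({x for x, _ in joint})
    ys = sorted({y for _, y in joint})
    # Pairs missing from the dictionary are treated as having probability 0.
    p_x = {x: sum(joint.get((x, y), Fraction(0)) for y in ys) for x in xs}
    p_y = {y: sum(joint.get((x, y), Fraction(0)) for x in xs) for y in ys}
    return all(
        joint.get((x, y), Fraction(0)) == p_x[x] * p_y[y]
        for x, y in product(xs, ys)
    )


faces = range(1, 7)
with_replacement = {(a, b): Fraction(1, 36) for a, b in product(faces, repeat=2)}
without_replacement = {
    (a, b): Fraction(1, 30) for a, b in product(faces, repeat=2) if a != b
}

print(is_independent(with_replacement))     # True
print(is_independent(without_replacement))  # False
```

The second check fails already at the pair (1, 1), whose joint probability is 0 while the product of the marginal probabilities is 1/36.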